Remote IoT Batch Jobs: Explained + Examples & How-To Guide

Are you ready to unlock the true potential of your remote IoT deployments? Remote IoT batch jobs are the unsung heroes of the connected world, enabling the seamless automation and efficient analysis of vast data streams generated by your devices.

Progress in an increasingly interconnected world depends on extracting meaningful insights from a continuous flow of data. Remote IoT batch job processing is a key part of this picture. It turns raw device data into actionable intelligence through scheduled, automated processing runs, which streamlines operations across Internet of Things (IoT) deployments.

Let's take a closer look at how remote IoT batch jobs work. At its core, a remote IoT batch job is an orchestrated process that collects, organizes, and analyzes device data in bulk. Instead of handling each reading the moment it arrives, it processes an accumulated set of readings, such as the last hour's or day's worth, in a single run. Think of it as a well-oiled machine, methodically sifting through a mountain of information to produce actionable insights. As IoT systems grow, so does the volume of data they generate, and batch jobs provide a way to process that data efficiently and keep the deluge from connected devices under control.
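To make the collect, organize, and analyze cycle concrete, here is a minimal Python sketch. It assumes the readings have already been collected into an in-memory list of hypothetical `(device_id, temperature_c)` tuples; a real job would read them from storage instead.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical batch of readings collected since the last run: (device_id, temperature_c)
readings = [
    ("sensor-a", 21.0),
    ("sensor-b", 19.0),
    ("sensor-a", 22.0),
    ("sensor-b", 20.0),
    ("sensor-a", 23.0),
]

def run_batch(records):
    """Organize a batch of readings by device, then summarize each device."""
    # Organize: group the bulk data by device.
    by_device = defaultdict(list)
    for device_id, value in records:
        by_device[device_id].append(value)
    # Analyze: compute simple per-device statistics over the whole batch.
    return {
        device_id: {"count": len(values), "min": min(values),
                    "mean": mean(values), "max": max(values)}
        for device_id, values in by_device.items()
    }

summary = run_batch(readings)
print(summary["sensor-a"])  # {'count': 3, 'min': 21.0, 'mean': 22.0, 'max': 23.0}
```

The whole batch is processed in one pass, which is the defining difference from stream processing, where each reading would be handled as it arrives.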

Key Architectures and Technologies Used in Remote IoT Batch Jobs

The table below summarizes the key architectural components of a remote IoT batch job, along with typical technologies and example use cases for each.

| Component | Description | Technologies/Tools | Example Use Cases |
|---|---|---|---|
| Data Collection | The initial phase of remote IoT batch processing: gathering raw data from IoT devices. This involves setting up data pipelines and ensuring secure transmission. | IoT device SDKs, MQTT, HTTP, WebSockets, TLS (the successor to SSL) | Sensors collecting temperature readings, RFID readers capturing inventory data, GPS units tracking vehicle positions |
| Data Ingestion | Bringing data collected from IoT devices into a central system for processing. The system must be reliable and scalable enough to handle the influx of data. | Message queues, Apache Kafka, AWS IoT Core, Azure IoT Hub, Google Cloud IoT Core (retired in August 2023) | Receiving sensor readings, transmitting GPS data, aggregating log files from multiple devices |
| Data Storage | Storing the ingested data in a structured, accessible form that facilitates analysis. This is essential for long-term data retention and retrieval. | Cloud storage (AWS S3, Azure Blob Storage, Google Cloud Storage), databases (SQL, NoSQL), time-series databases (InfluxDB, TimescaleDB) | Storing historical sensor data, maintaining a database of device configurations, archiving operational logs |
| Data Transformation | Cleansing raw data and preparing it for analysis through cleaning, aggregation, filtering, and format conversion. | Data processing frameworks (Apache Spark, Apache Flink), programming languages (Python, Scala, Java), ETL tools (AWS Glue, Azure Data Factory, Google Cloud Dataflow) | Cleaning noisy sensor data, aggregating hourly readings into daily summaries, filtering out anomalies, converting data formats |
| Data Analysis | Examining transformed data to derive meaningful insights, patterns, and trends. This is where value is extracted from the data. | Machine learning models, statistical analysis tools, data visualization platforms (Tableau, Power BI, Grafana), data mining techniques | Predicting equipment failures, identifying anomalies in sensor data, optimizing operational efficiency |
| Job Scheduling and Orchestration | Managing the execution order and timing of batch jobs so that processes run in the correct sequence, at the right time, and with the required resources. | Workflow management tools (Apache Airflow, AWS Step Functions, Azure Logic Apps), cron jobs, job schedulers | Running data processing tasks at regular intervals, scheduling data backups, coordinating data processing pipelines |
| Monitoring and Alerting | Tracking the performance, status, and health of the entire batch processing pipeline, enabling proactive issue detection and fast resolution. | Monitoring tools (Prometheus, Grafana, Datadog), alerting systems (PagerDuty, Slack integration), logging | Monitoring job execution status, identifying data processing errors, generating alerts when performance thresholds are exceeded |
| Security and Access Control | Protecting data integrity, preventing unauthorized access, and ensuring compliance with regulatory requirements. | Encryption, access control lists (ACLs), authentication and authorization, data masking | Securing data transmission, restricting access to sensitive information, ensuring data privacy |

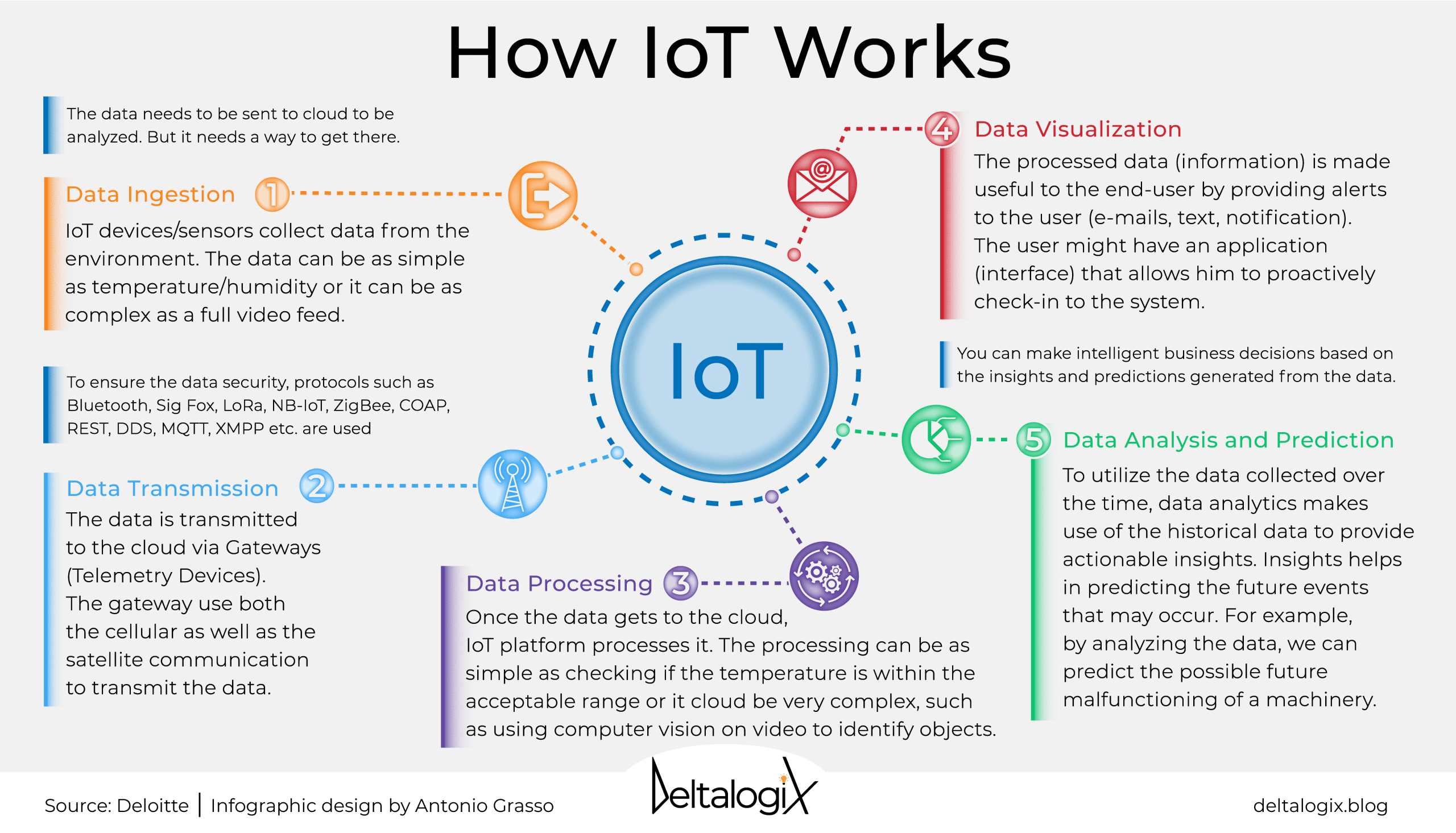

The architecture of remote IoT batch jobs is typically built around the components listed above. The first step, as mentioned earlier, is data collection: gathering data from IoT devices over protocols such as MQTT, HTTP, and WebSockets, with TLS (the successor to SSL) securing the connection.
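On the device side, collection often means buffering readings locally and sending them in batches rather than one message per reading. The sketch below packs buffered readings into JSON payloads that each stay under a size limit, since MQTT brokers typically cap message size. The function name, reading fields, and limit are hypothetical, and the actual MQTT publish over TLS is left out.

```python
import json

def chunk_payloads(readings, max_bytes):
    """Pack buffered readings into JSON array payloads of at most max_bytes each.

    A single reading larger than max_bytes still gets its own (oversized) payload;
    a real device would need to handle that case explicitly.
    """
    payloads, current = [], []
    for reading in readings:
        candidate = current + [reading]
        if current and len(json.dumps(candidate).encode("utf-8")) > max_bytes:
            # Adding this reading would exceed the limit: close the current payload.
            payloads.append(json.dumps(current))
            current = [reading]
        else:
            current = candidate
    if current:
        payloads.append(json.dumps(current))
    return payloads

# Hypothetical buffer of ten temperature readings from one device
buffer = [{"device_id": "sensor-a", "ts": i, "temp_c": 20.0} for i in range(10)]
payloads = chunk_payloads(buffer, max_bytes=200)
print(len(payloads))  # 4 (three payloads of 3 readings, one of 1)
```

Batching on the device trades a little latency for fewer messages, which matters when connectivity or per-message costs are the constraint.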

Data ingestion follows the collection phase, bringing the data into a central system. This requires robust and scalable infrastructure. Popular technologies include message brokers such as Apache Kafka and cloud IoT platforms such as AWS IoT Core and Azure IoT Hub. After ingestion, the data must be stored. Storage can take several forms, ranging from cloud object storage (AWS S3, Azure Blob Storage) to SQL or NoSQL databases and time-series databases.
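A common way to organize batch data in object storage is a time-partitioned key layout, so that a job processing one hour or one day reads only the matching prefix. The sketch below builds keys in the Hive-style `name=value` format that engines such as Apache Spark can use to skip partitions outside the batch window. The layout, the `raw` prefix, and the `object_key` helper are assumptions for illustration, not an AWS requirement.

```python
from datetime import datetime, timezone

def object_key(device_id: str, ts: int, prefix: str = "raw") -> str:
    """Build a time-partitioned object key (hypothetical layout) from a Unix timestamp."""
    t = datetime.fromtimestamp(ts, tz=timezone.utc)  # partition by UTC, not local time
    return f"{prefix}/date={t:%Y-%m-%d}/hour={t:%H}/device_id={device_id}/{ts}.json"

print(object_key("sensor-a", 1_700_000_000))
# raw/date=2023-11-14/hour=22/device_id=sensor-a/1700000000.json
```

A daily job would then list only keys under `raw/date=2023-11-14/`, rather than scanning the whole bucket.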

Data transformation cleans, aggregates, filters, and reformats the data for analysis, often with specialized frameworks such as Apache Spark or managed ETL services. Data analysis then derives meaningful insights from the transformed data. Job scheduling and orchestration tools manage the sequence and timing of these steps, ensuring that each one runs only after the steps it depends on have finished.
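Orchestration tools such as Apache Airflow model a pipeline as a directed acyclic graph of dependent steps. As a minimal stand-in (not Airflow's API), Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) can compute an order in which every step runs after its dependencies. The step names and the `run_pipeline` helper are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each step maps to the set of steps it depends on.
pipeline = {
    "ingest": set(),
    "clean": {"ingest"},
    "aggregate_daily": {"clean"},
    "detect_anomalies": {"aggregate_daily"},
    "update_dashboard": {"aggregate_daily"},
}

def run_pipeline(graph, actions):
    """Run every step after all of its dependencies; return the order used."""
    # static_order() raises graphlib.CycleError if the dependencies form a cycle.
    order = list(TopologicalSorter(graph).static_order())
    for step in order:
        actions[step]()  # a real orchestrator would also handle retries and failures
    return order

log = []
actions = {name: (lambda n=name: log.append(n)) for name in pipeline}
order = run_pipeline(pipeline, actions)
print(order)
```

`detect_anomalies` and `update_dashboard` both depend only on `aggregate_daily`, so their relative order is not fixed; a full orchestrator could run them in parallel.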

AWS (Amazon Web Services) is a good example of how such a platform is assembled. You might use EC2 instances for processing power, Lambda functions for serverless execution, and AWS IoT Core for device connectivity and management. Other cloud platforms offer similar building blocks: Azure provides IoT Hub, Data Factory, and Azure Functions, and Google Cloud provides Cloud Dataflow and Cloud Functions (its Cloud IoT Core device service was retired in August 2023). Together, these services provide the necessary compute, storage, and management capabilities.
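As an example of serverless transformation, here is a sketch of a Python Lambda handler that cleans a batch of readings and aggregates them into per-device daily means. The `lambda_handler(event, context)` signature follows Lambda's Python handler convention, but the event shape (a `records` list with `device_id`, `date`, and `temp_c` fields) and the valid temperature range are assumptions for illustration.

```python
from collections import defaultdict

# Hypothetical plausible range for the sensor; readings outside it are treated as noise.
VALID_RANGE_C = (-40.0, 125.0)

def lambda_handler(event, context):
    """Clean a batch of readings and aggregate them into per-device daily means.

    Assumes a hypothetical event shape:
    {"records": [{"device_id": str, "date": "YYYY-MM-DD", "temp_c": float or None}, ...]}
    """
    sums, counts, dropped = defaultdict(float), defaultdict(int), 0
    low, high = VALID_RANGE_C
    for rec in event.get("records", []):
        value = rec.get("temp_c")
        if value is None or not (low <= value <= high):
            dropped += 1  # clean: skip missing or implausible readings
            continue
        key = (rec["device_id"], rec["date"])
        sums[key] += value
        counts[key] += 1
    # Aggregate: one summary row per (device, day)
    daily = [
        {"device_id": device_id, "date": day,
         "mean_temp_c": sums[(device_id, day)] / counts[(device_id, day)],
         "samples": counts[(device_id, day)]}
        for device_id, day in sorted(sums)
    ]
    return {"daily": daily, "dropped": dropped}

event = {"records": [
    {"device_id": "sensor-a", "date": "2024-01-01", "temp_c": 20.0},
    {"device_id": "sensor-a", "date": "2024-01-01", "temp_c": 22.0},
    {"device_id": "sensor-a", "date": "2024-01-01", "temp_c": 999.0},
    {"device_id": "sensor-b", "date": "2024-01-01", "temp_c": None},
]}
print(lambda_handler(event, None))
# {'daily': [{'device_id': 'sensor-a', 'date': '2024-01-01', 'mean_temp_c': 21.0, 'samples': 2}], 'dropped': 2}
```

Returning the count of dropped readings alongside the results makes data-quality problems visible to the monitoring step instead of silently discarding them.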

A fundamental part of working with remote IoT jobs is creating and running them. Cloud services such as AWS IoT provide a job wizard in the console that simplifies the process: you define the job, set its parameters, and specify the devices or device groups it targets. Note that an AWS IoT job is a remote operation sent to devices, such as a configuration change or firmware update, rather than a data-processing run. For example, a job could adjust the light threshold on a set of devices to keep them operating correctly. Jobs can also be saved (for example, as job templates) and run later, which adds flexibility for scheduling and automation.
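In AWS IoT, the job document is a JSON document whose contents you define; targeted devices retrieve it and act on it. The sketch below shows a hypothetical document for the light-threshold example and a device-side function that validates it and returns the updated configuration. The field names and the `apply_job` helper are assumptions, and reporting the job execution status back to AWS IoT is omitted. With boto3, the serialized document would be passed as the `document` argument of the IoT client's `create_job` call, together with a `jobId` and a list of `targets`.

```python
import json

# Hypothetical job document for the light-threshold example; AWS IoT does not
# prescribe these field names, you define the document's contents yourself.
job_document = {"operation": "setLightThreshold", "lightThresholdLux": 350}

def apply_job(document_json, device_config):
    """Device side: validate a job document and return the updated configuration."""
    doc = json.loads(document_json)
    if doc.get("operation") != "setLightThreshold":
        raise ValueError(f"unsupported operation: {doc.get('operation')!r}")
    threshold = doc.get("lightThresholdLux")
    if isinstance(threshold, bool) or not isinstance(threshold, (int, float)) or threshold < 0:
        raise ValueError("lightThresholdLux must be a non-negative number")
    # Return a new config rather than mutating the current one, so a failed job leaves it intact.
    return {**device_config, "light_threshold_lux": threshold}

current = {"light_threshold_lux": 200, "sampling_interval_s": 10}
print(apply_job(json.dumps(job_document), current))
# {'light_threshold_lux': 350, 'sampling_interval_s': 10}
```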

Remote IoT batch jobs have broad applications across industries. In industrial IoT, they support quality control, predictive maintenance, and supply chain optimization. Retailers use them to analyze customer behavior and fine-tune inventory management, improving efficiency and profitability.

The benefits grow as IoT systems expand. Businesses use remote IoT batch jobs to streamline operations, reduce costs, and improve efficiency. The ability to process large volumes of accumulated data in a single run is becoming a cornerstone of modern data management strategies, increasing the value of IoT investments.

For those seeking a deeper understanding and practical application, here are some more considerations to help with effective implementation:

- Data Volume and Velocity: Analyze the volume and velocity of data generated by your IoT devices. This information will guide the selection of the right infrastructure.

- Scalability: Design the system to scale with future data growth and an increasing number of connected devices.

- Security: Protect the data with encryption, authentication, and authorization.

- Cost Optimization: Implement cost-effective data storage and processing solutions. Monitor usage and costs regularly.

- Monitoring and Alerting: Track job status, run duration, and failure rates, and set up alerts that fire when these exceed defined thresholds.
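The first consideration, data volume and velocity, comes down to simple arithmetic: devices × readings per device per day × bytes per reading. The helpers below apply it; the fleet size, interval, and payload size in the usage example are hypothetical, and the result ignores protocol overhead and compression.

```python
SECONDS_PER_DAY = 86_400

def messages_per_second(devices, interval_s):
    """Fleet-wide message rate if every device sends one reading per interval."""
    return devices / interval_s

def daily_volume_bytes(devices, interval_s, payload_bytes):
    """Raw bytes per day: devices x readings per device per day x bytes per reading."""
    readings_per_device_per_day = SECONDS_PER_DAY / interval_s
    return devices * readings_per_device_per_day * payload_bytes

# Hypothetical fleet: 10,000 devices, one 200-byte reading every 10 seconds
print(messages_per_second(10_000, 10))            # 1000.0
print(daily_volume_bytes(10_000, 10, 200) / 1e9)  # 17.28 (GB per day, decimal units)
```

In this example, that is 1,000 messages per second and 10,000 × 8,640 × 200 = 17,280,000,000 bytes, roughly 17.3 GB of raw data per day, which is the kind of figure that should drive the choice of ingestion and storage infrastructure.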

In summary, remote IoT batch jobs are an essential part of modern IoT solutions that generate data at scale.

For more information, you can visit the AWS IoT official website.